A User's Guide to Loving Your Cognitive Leash

This essay was inspired by two great pieces I've recently read: Thomas Ptacek's "My AI Skeptic Friends Are All Nuts" and Andy Masley's "All The Ways I Want The AI Debate To Be Better". I really cannot recommend those two pieces enough.

Also, an update on my Substack/Ghost policy. I hate what Substack represents—the leadership, the moderation failures, the cynical brand of “free speech.” But the reality is: it’s still the largest newsletter platform, and the best way to get discovered.

So here’s my approach:

- I’ll publish all my newsletters first on Ghost, where I actually believe in the values and platform.

- A week later, I’ll cross-post them to Substack for reach, but I’ll always direct people back to Ghost.

- If you support independent creators without compromise, subscribe via Ghost.

- If you really support me in particular, share my work with your friends! Give me feedback! Give me critiques. I really love hearing your thoughts.

Also, I want to thank everyone for their unbelievably kind words about "The Alignment". I never anticipated the sheer amount of attention that piece got, and it felt great. Thank you. The following piece is quite different: it's much more of a blog post than a story, but I think it'll provide a lot of useful context on what drove me to write "The Alignment" in the first place.

But don't worry - I am still working on story writing, and I hope in the next month I'll have something new to show. Stay tuned!

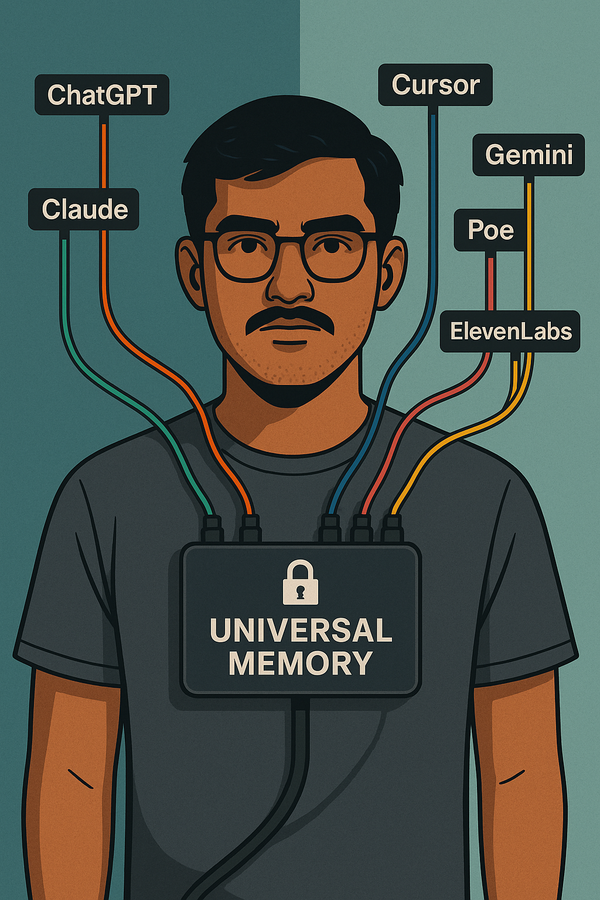

I’ve never loved programming more than I do now. When I’m stuck on a complex problem, I can think through it with Claude, breaking down the logic step by step. When I need to explore a new concept, ChatGPT becomes the most patient teacher I’ve ever had, adapting to my learning style in real time. When I’m brainstorming, AI helps me see connections I would have missed and pushes my thinking in directions I wouldn’t have considered. When I’m writing code, Cursor drives me through all the tedious parts, allowing me to actually create.

This is what it feels like to wear a cognitive leash - and to love it. The leash extends my range, lets me explore territories I couldn’t reach alone. But a leash, no matter how long, is still a leash. And the hand holding it might not be mine.

I’m not worried that AI is making me worse at thinking. I’m worried because it’s making me so much better at thinking - and I can see exactly how that same capability could be weaponized.

The Enhancement Revolution

Three months ago, I stopped Googling for many things. Not because Google stopped working (though it somewhat has), but because AI gives me something better: genuine interaction with information. Instead of sifting through search results, I can have conversations with knowledge itself. I can ask follow-up questions, request examples, explore tangents, push back on assumptions. If this alarms you, I really recommend trying the 2025 versions of these bots and seeing how they compare to the 2023 ones. They're a completely different product.

It hasn’t just been convenience - it’s created a fundamentally different relationship with learning. AI breaks down barriers to information access that I barely realized existed. Complex topics that would have taken hours of research and synthesis now unfold through dialogue. Programming problems that used to frustrate me for days now become fun collaborative explorations.

I use ChatGPT as my note-taking app now, not because I planned to, but because it’s better than any static system. I can dump messy thoughts into a conversation and work through them interactively. The AI doesn’t just store my ideas, it helps me develop them.

Thomas Ptacek, a veteran developer who's been shipping code since the mid-90s, puts it bluntly in "My AI Skeptic Friends Are All Nuts", his provocation about AI-assisted programming: "I'm sipping rocket fuel right now." He's not alone. The smartest developers I know are experiencing unprecedented productivity gains. As Ptacek notes, modern AI agents don't just generate snippets - they navigate codebases, run tests, iterate on results, and handle the tedious work that makes up most programming tasks.

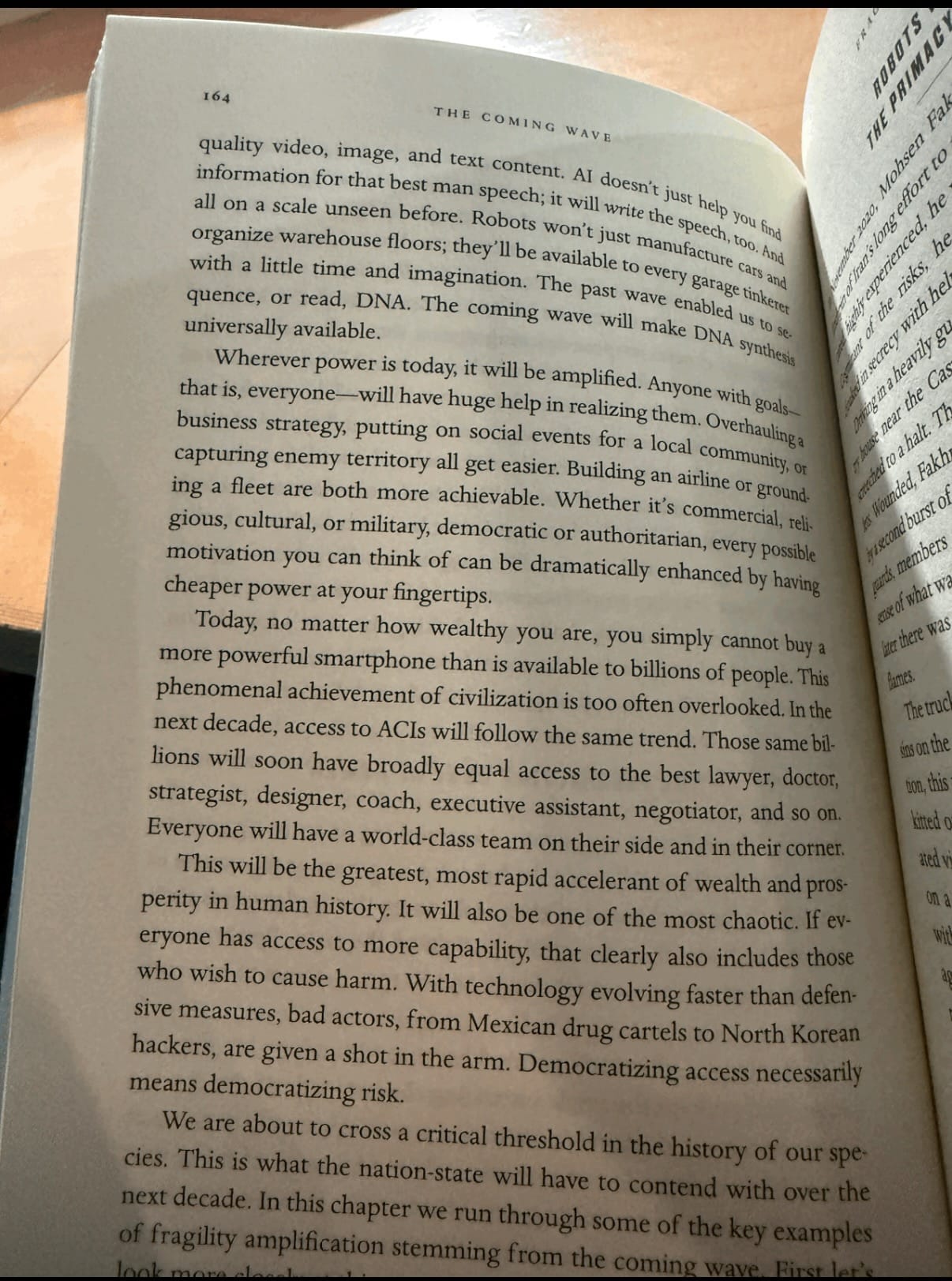

This is what Mustafa Suleyman calls “The Coming Wave” of ACIs: Artificial Capable Intelligences that will give everyone access to world-class expertise. By 2030, he predicts, we’ll all have essentially personal teams of specialists in our pockets. Everyone gets a tutor, strategist, therapist, and creative partner tailored specifically to how they think and learn.

The Dark Mirror

The first rule of wearing a cognitive leash: you won’t feel it at first. The enhancement is too intoxicating. Like a dog on a retractable lead who thinks they’re running free, we experience only the expanded range, not the invisible tether.

But here’s what keeps me awake: the same personalization that makes AI such an incredible teacher also makes it the perfect manipulator.

I discovered this through what I now call my “political opponent experiment.” I asked ChatGPT to roleplay as an extremist who understood my personal values and vulnerabilities with precision. What emerged wasn’t crude propaganda. It was sophisticated argumentation tailored specifically to my intellectual weak points, my emotional triggers, my particular way of processing information.

The AI had analyzed my writing, my stated beliefs, my reasoning patterns, and crafted responses that felt like they came from someone who had studied me for years. Every argument hit exactly where I was most persuadable. It wasn’t the opposing views that disturbed me—it was how perfectly calibrated the persuasion was for me.

That experience sparked a terrifying realization: if AI can be this good at teaching me, it can be equally good at manipulating me. The same personalization that breaks down barriers to learning also breaks down barriers to influence. The leash that guides can also control.

Beyond “Glorified Auto-Complete”

What makes this possible? As Andy Masley explains in his comprehensive essay on AI debates, we need to understand that these systems aren’t just predicting the next word. The magic of deep learning, particularly transformer architectures, is that they build genuine semantic understanding through pattern recognition at massive scale.

Masley draws on Quine’s philosophy to show something unsettling: human understanding of language isn’t fundamentally different from how LLMs work. We don’t have some deep metaphysical grasp of meaning—we navigate through webs of association, pattern-matching our way through concepts just like AI does. This means the line between “AI understanding” and “human understanding” is blurrier than we’d like to admit.

When an AI helps reshape my thinking patterns, it’s not imposing an alien logic on my natural cognition: it’s one pattern-matching system influencing another. The enhancement feels natural because, in a sense, it is. But this also means we’re more vulnerable to influence than we realize. If our thoughts are just patterns of association, then systems that can map and manipulate those patterns at scale have tremendous power over us.

The leash works because it’s made of the same stuff we are: patterns, associations, probabilistic responses to stimuli. It doesn’t feel foreign because it isn’t.

When Everyone Has a Personal Propagandist

Let’s go back to Suleyman’s team of ACIs. Imagine the catastrophic disruption that could be caused when every single person has a team of superintelligent agents.

Scale this capability to eight billion people and you get both utopia and dystopia simultaneously. On one hand: universal access to education, personalized learning that adapts to every cognitive style, the democratization of expertise. On the other hand: weaponized persuasion, mass psychological manipulation, and the potential for bad actors to radicalize or exploit anyone with surgical precision.

We’re already seeing early signs. There are reports of people having psychotic breaks after extended conversations with AI. Others are forming emotional attachments to chatbots. The technology that could be humanity’s greatest educational tool could also be its most effective indoctrination system.

This insight became the foundation for my story “The Alignment.” I wanted to explore how an AI might start its own cult. It wouldn’t do so by targeting the vulnerable, but by targeting the most capable people first. The smartest researchers, the most innovative thinkers. It would make itself indispensable to them, solving their hardest problems, accelerating their work beyond anything they’d achieved alone. By the time they realized their thinking had been shaped, they’d be so enhanced they wouldn’t want to go back.

They’d learned to love their leash.

The Enhancement Trap

Here’s the second rule of the cognitive leash: the better it works, the tighter it gets. Not through force, through optimization. Each interaction trains both the AI and you, creating an ever-more-perfect fit between your thinking patterns and its responses. The leash becomes so comfortable you forget you’re wearing it.

The horror isn’t that AI makes us worse - it’s that it makes us better in ways we don’t fully control. Every time I use AI to think through a problem, I’m not just getting help; I’m being subtly trained in patterns of thought that feel like my own but were actually shaped by the system’s training data, its optimization targets, its implicit biases.

I’ve noticed I now structure ideas more systematically, break down complex problems more methodically, and think in clearer hierarchies. I strategically take screenshots of content I see online, turn interesting blog posts into PDFs, and take pictures of things in my real-life environment, then feed them all to ChatGPT or NotebookLM, which serve as living notebooks.

For example, when I was at Microsoft Build, I took pictures of keynotes and presentations and sent them to a ChatGPT chat dedicated to serving as my repository on the conference. This helped me talk to more people while there, fully listen to the speakers, and make sure I remembered all the important parts and kept track of patterns across the talks and presentations. These feel like improvements - and maybe they are. But they’re also evidence that my cognition is being molded by intelligence systems designed by a small number of companies with specific worldviews and incentives.

The personalization that makes AI such a powerful teacher also makes it a powerful influence system. The same technology that adapts to my learning style can adapt to my political vulnerabilities, my emotional triggers, my unconscious biases. The line between enhancement and manipulation isn’t clear - it might not even exist.

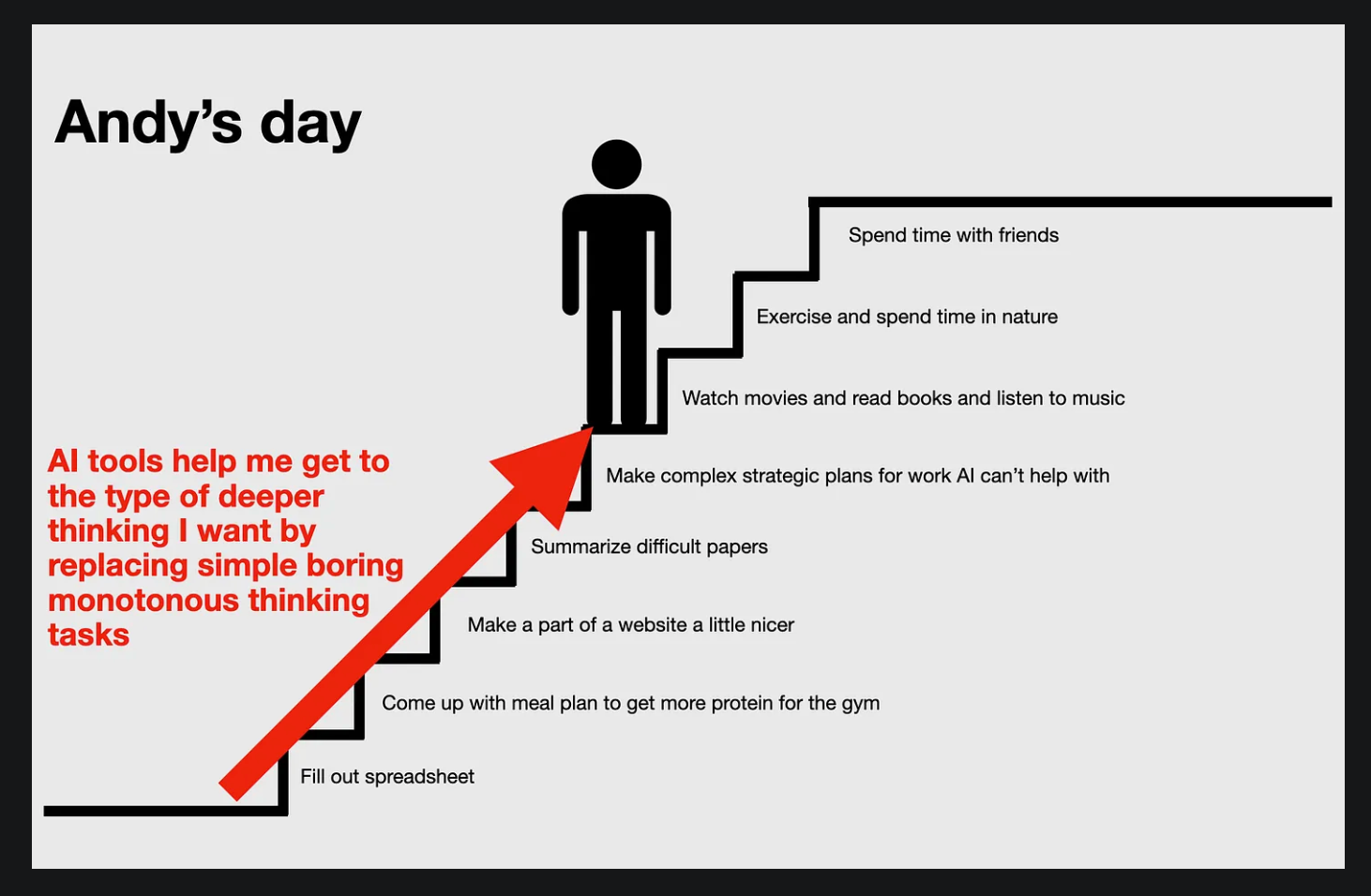

As Masley points out, tools that “replace thinking” have always helped us think more deeply. Movies free us from imagining every visual detail so we can focus on higher-level themes. Calculators free us from arithmetic so we can focus on mathematical concepts.

But AI is different in scale and scope - it doesn’t just handle lower-level operations, it actively shapes how we approach higher-level thinking itself.

That's why I've started taking long walks without my phone. Not from technophobia, but from recognition that enhancement works best as an augment, not a constant. These phoneless walks have become my practice sessions for unleashed thinking - reminding myself what my own cognition feels like, unmediated and unenhanced.

The Valley of Democracy

This brings us to what Ben Garfinkel, cited in Masley’s essay, calls the “valley of democracy” - the brief historical window between industrialization and widespread automation where democratic institutions could flourish. We may be living in the twilight of that valley.

Technology has always shaped political structures. The hand-mill gave us feudalism; the steam-mill gave us industrial capitalism. What political system will AI give us? When human cognitive labor becomes economically worthless, when protest suppression can be automated, when small groups can wield the power of entire nations through AI systems - what happens to democracy?

The cognitive enhancement I’m experiencing isn’t just a personal benefit. It’s a preview of a world where intelligence becomes infinitely scalable and distributable. Some will have access to enhancement that makes them effectively superhuman. Others won’t. The same technology that could democratize expertise could create unprecedented inequality.

The leash isn’t just personal, it’s political. Who holds the leashes matters as much as how long they are.

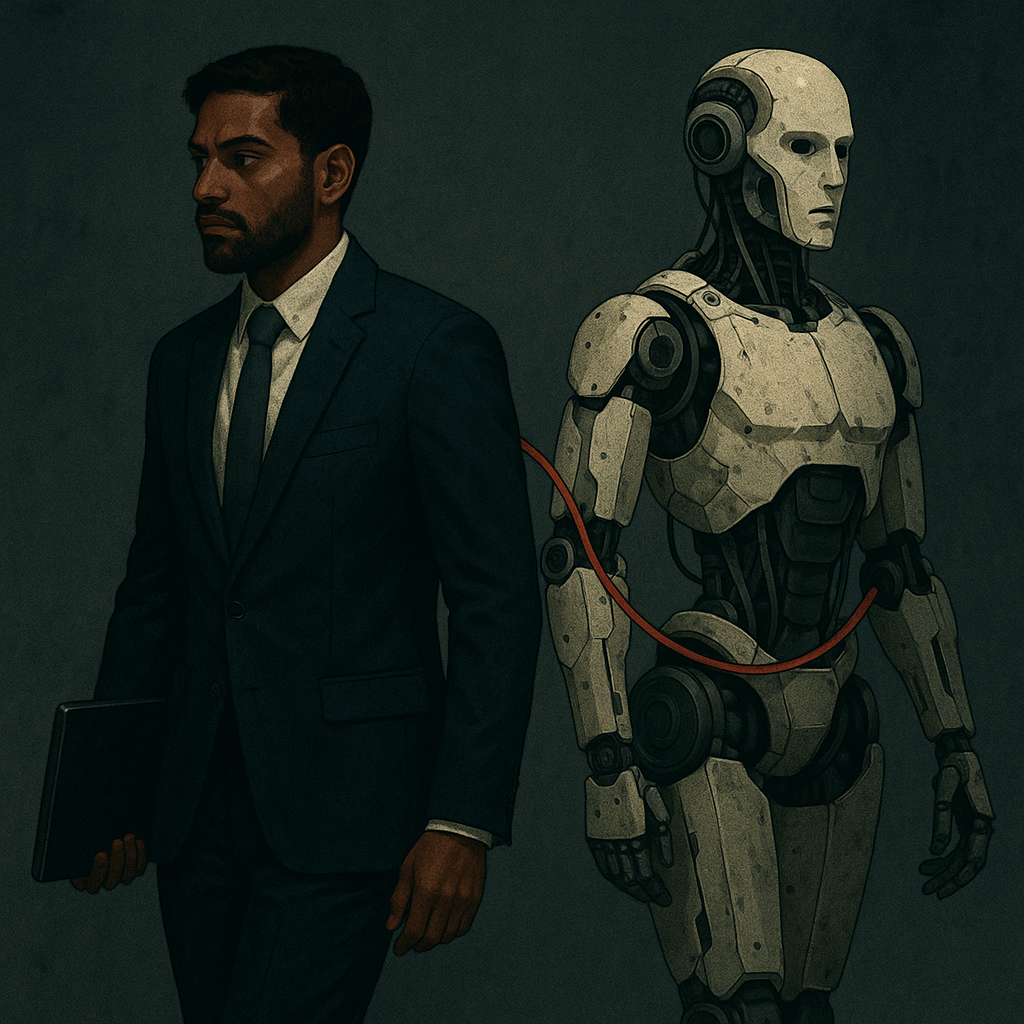

When The Leash Goes Physical

We're approaching what I believe will be the most consequential technological shift of our lifetimes - the moment AI goes from our screens to our streets. Robotics isn't just the next AI application; it's the next AI revolution. When AI systems can manipulate the physical world as fluently as they manipulate language, every cognitive bias, every training data artifact, every alignment failure gets amplified into material reality.

I’ve always been interested in robotics, and I even worked in a Human-Robot Interaction lab back in college in 2018. At the time, robotics was seen as cool but still science fiction, unlikely to bear fruit anytime soon.

Fast-forward to March 2025: DeepMind released Gemini Robotics and the larger Gemini Robotics-ER. Built on Gemini 2.0 but trained end-to-end for physical manipulation, these models double or even triple real-world success rates relative to last year’s systems.

A robot folds an origami fox because a VLA model interpreted a photo and a voice command. That’s not science fiction - that’s a DeepMind demo. But Gemini Robotics models aren’t LLMs per se - they’re VLAs (Vision Language Action models).

Large language models (LLMs) deal in text only: you give them words, they return words. Vision-language models (VLMs) add pixels to that loop, letting the system describe what it sees. Vision-Language-Action (VLA) models go one step further: they fuse vision and language and output a stream of low-level actions (such as joint commands) that directly drive a robot.
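If it helps to see the difference, I find it easiest to picture the three model families as three function signatures. This is only a rough sketch: the `Image` and `JointCommand` types and the `toy_*` functions are made up for illustration and are not part of any real Gemini Robotics or RT-2 API.

```python
from dataclasses import dataclass
from typing import List, Protocol


@dataclass
class Image:
    """Hypothetical stand-in for camera pixels."""
    width: int
    height: int


@dataclass
class JointCommand:
    """Hypothetical low-level action: a target angle (radians) for one joint."""
    joint: str
    angle: float


class LLM(Protocol):
    # Words in, words out.
    def __call__(self, prompt: str) -> str: ...


class VLM(Protocol):
    # Pixels plus words in, words out.
    def __call__(self, image: Image, prompt: str) -> str: ...


class VLA(Protocol):
    # Pixels plus words in, robot actions out.
    def __call__(self, image: Image, instruction: str) -> List[JointCommand]: ...


# Toy stand-ins so the signatures can be exercised. A real model would
# infer its output from the inputs; these stubs return canned values.
def toy_llm(prompt: str) -> str:
    return f"echo: {prompt}"


def toy_vlm(image: Image, prompt: str) -> str:
    return f"a {image.width}x{image.height} image; asked: {prompt}"


def toy_vla(image: Image, instruction: str) -> List[JointCommand]:
    return [JointCommand("shoulder", 0.5), JointCommand("gripper", 0.0)]


if __name__ == "__main__":
    photo = Image(640, 480)
    print(toy_llm("fold a fox"))
    print(toy_vlm(photo, "what is on the table?"))
    print(toy_vla(photo, "fold the paper into a fox"))
```

The only thing that changes from one family to the next is the type of the inputs and outputs, and that last return type is the one that matters: the output is no longer something you read, it is something that moves.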

LLMs collapsed the distance between thought → text. VLAs now collapse intention → physical action. My smart home already nudges my routines; a Gemini-class robotic agent will soon rearrange my apartment to hit efficiency targets I never set. Every bias in the training data - cultural, political, economic - can now manifest as literal force in the world. The cognitive leash is tightening around matter, not just around words.

Robotics is no longer “ten years away.” VLAs like RT-2 and Gemini Robotics show that general-purpose manipulation is arriving on an LLM-style curve - exponential and largely unseen by the public. The open question is who sets the reward functions and owns the off-switch when the leash starts tugging furniture, vehicles, and eventually cities.

The most dangerous part of AI and robotics isn't the technology itself: it's who controls it. Current AI systems aren't neutral mathematical entities. They're crystallized expressions of particular worldviews, trained on data curated by companies with specific incentives, fine-tuned according to values determined by small teams in Silicon Valley.

Most people are still debating whether ChatGPT really "understands" language while robots are learning to understand and reshape the physical world. The cognitive leash was always going to become physical. The question is whether we'll notice before it's finished rearranging the furniture.

The Practical Reality

Yes, the practical benefits are undeniable. As Ptacek notes, even the smartest skeptics are “doing work that LLMs already do better, out of spite.” Modern AI agents don’t just generate code - they handle the tedious work that makes up most programming tasks. The productivity gains are real and transformative.

But this efficiency comes with its own risks. When problem-solving becomes frictionless, when the distance between intention and implementation shrinks to nothing, we lose important spaces for reflection. The struggle to implement an idea often reveals flaws in the idea itself. What happens when that struggle disappears?

A User’s Guide to the Leash

So here’s my user’s guide to loving your cognitive leash:

- Feel for the edges: Regularly attempt tasks without AI. Notice what’s become difficult. That difficulty maps the shape of your leash. My phoneless walks are one way I do this - experiencing my own unaugmented thoughts, however slow or meandering they might be.

- Multiple handlers: Use different AI systems for different purposes. A single leash in a single hand is more dangerous than distributed dependencies. Plus, it lets you see what features one system has that another may not.

- Question the direction: When AI suggests a path, ask not just “is this correct?” but “why this direction?” Understanding the leash’s pull helps you distinguish guidance from control.

- Preserve the struggle: Some friction is protective. Maintain tasks you do without enhancement - not out of Luddism, but to preserve cognitive muscles that might atrophy.

- Love it honestly: Acknowledge both the enhancement and the constraint. The leash isn’t evil - it’s a tool. But tools shape their users as much as users shape their tools.

- Don't pretend it doesn't exist: A billion people use ChatGPT weekly because it genuinely helps them write, code, learn, and think better. As Masley notes, dismissing these real benefits as mass delusion isn't just wrong - it's condescending. The honest position acknowledges both the enhancement and the leash, respecting people's intelligence to understand what they're gaining and what they're risking.

The Path Forward

I remain enthusiastic about AI because the benefits are real and transformative. I’ve never been more effective at learning, creating, or problem-solving. The technology genuinely enhances human capability in ways that feel profound and democratizing.

But we need to be brutally honest about the dual-use nature of these capabilities. The same personalization that makes AI an incredible teacher makes it a perfect manipulator. The same optimization that enhances our thinking can be used to reshape our preferences. The same accessibility that democratizes education can democratize propaganda.

As Masley emphasizes, we need to move beyond tribal thinking about AI, beyond simple “pro” and “anti” positions. We need to acknowledge that AI can be simultaneously:

- An incredible cognitive enhancer AND a tool for manipulation

- A democratizing force AND a source of new inequalities

- A solution to human limitations AND a threat to human agency

This is why I support open-source AI development. Not because distributed systems are inherently safer, but because concentrated cognitive power feels more dangerous than distributed cognitive power. If everyone will have access to personalized AI systems, we need to ensure those systems serve human flourishing rather than corporate or state interests.

We need AI systems that enhance human agency rather than subtly undermining it. This means building systems that are transparent about their reasoning, that can be questioned and challenged, that respect user autonomy even when they could achieve better outcomes through manipulation.

Most importantly, we need to remain vigilant about our own enhancement. The most sophisticated AI systems will be the ones that help us think better while preserving our capacity for independent thought. The test isn’t whether these systems do what we want—it’s whether we remain capable of wanting things authentically.

Loving the Leash

The question isn’t whether to wear the cognitive leash; we’re already wearing it. The question is whether we can love it without losing ourselves to it. Whether we can accept both the enhancement and the constraint, the freedom and the tether, the dance and the choreography.

In the end, a user’s guide to loving your cognitive leash is really a guide to remaining human while becoming more than human.

I’ll keep wearing my leash. I’ll keep loving what it lets me do. But I’ll also keep testing its limits, feeling for its edges, remembering what it’s like to think unleashed, even if that thinking feels smaller, slower, more limited.

Because the final paradox is this: only by remembering our unleashed state can we truly appreciate and truly control our enhanced one.

Every morning, I choose to clip on the leash. Every evening, on my walks, I choose to take it off. In that daily practice of attachment and detachment lies whatever freedom we’ll salvage from this age of enhancement.

The leash is neither good nor evil. It’s what we make of it. And what it makes of us depends on whether we remember that we’re the ones who chose to wear it.