Your Context, Your Data, Your Rules: A Universal Memory Store

A quick note before we dive in: this is a deeply technical post for my friends who are already neck-deep in the AI space. If you're here for my fiction writing, I promise the next short story is coming - I'm just being a perfectionist about it. After "The Alignment" got way more attention than I expected, I want to make sure the next piece genuinely earns your time. So while that marinates, here's something different: a technical manifesto about a problem that's been driving me insane. This really isn't for a general audience - if you're not currently juggling five different AI tools and cursing every time you have to re-explain the same problem to both Cursor and Claude, maybe bookmark this for later. For everyone else - let's dive in.

Let's Be Honest. You're Drowning in Intelligence That Can't Remember Your Name.

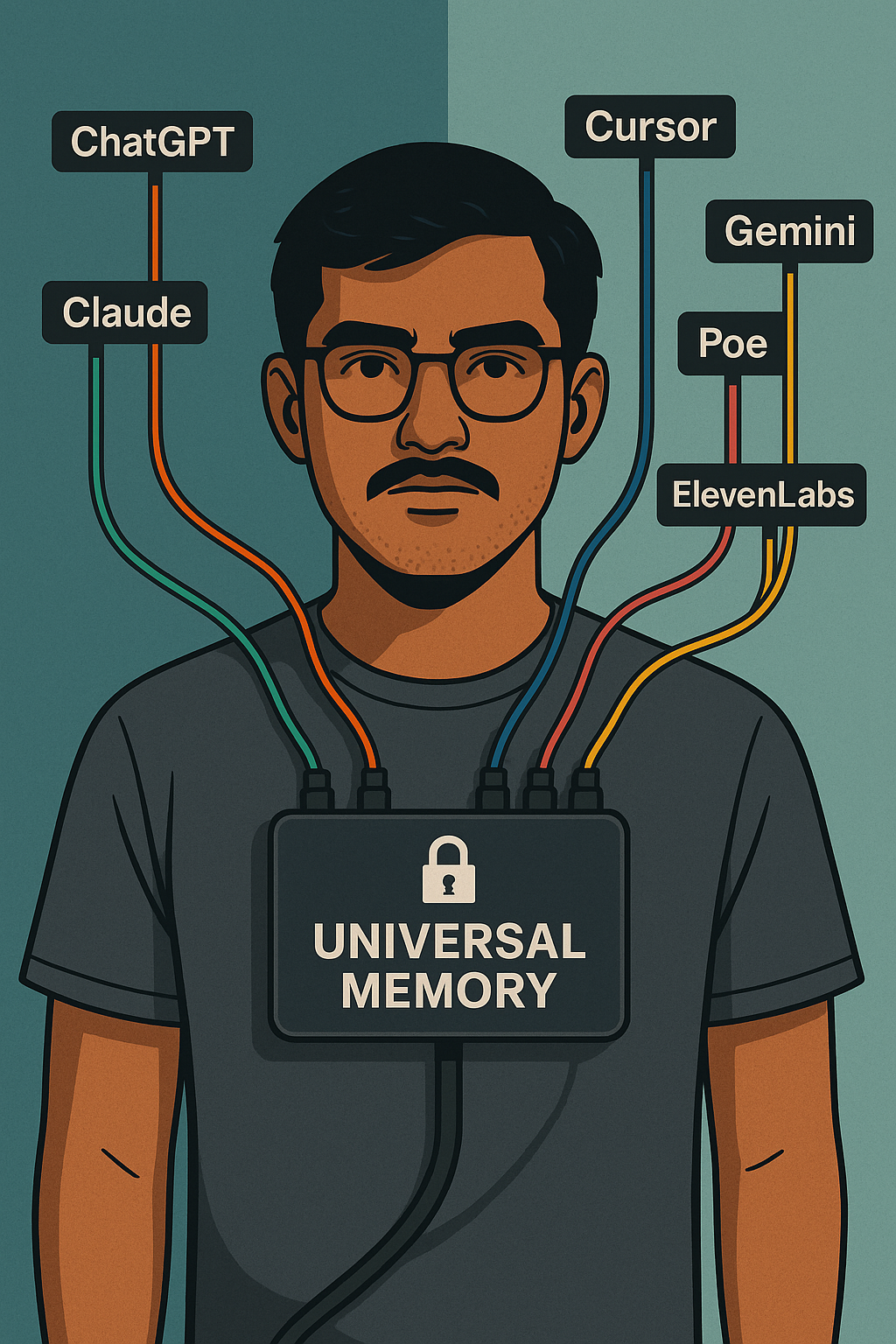

I have a problem. I use way too many AI tools. Cursor, Claude, Gemini, ChatGPT, ElevenLabs and now Dia. The list is embarrassing.

You'd think with all this "intelligence augmentation," my workflow would be frictionless. Instead, it's the opposite: if you use many AI tools, none of them really knows you. At best, each one knows a fragment - a sliver of context.

This is the real AI crisis, the one happening not in a far-off sci-fi future, but right now, in the tedious, grinding reality of your workflow: context fragmentation. Every tool builds its own brittle, siloed effigy of "you." You are the ghost in a hundred machines, forced to reintroduce yourself at every turn. It's like being stuck in a never-ending series of intake interviews with amnesiac therapists, each one assuring you they "value your journey" before promptly forgetting it.

We were promised personal AI. The irony is that if you're an enthusiast, what you actually get is a graveyard of context.

The Solution: An Open Memory Palace, Not a Walled Garden

The fix is not another feature. It's infrastructure.

I'm talking about a Universal AI Memory Store: a user-owned, encrypted, and portable vault for your digital self. Not a "profile" that Google or OpenAI can scrape for ad-targeting, but a sovereign piece of data infrastructure that you control. One place where your preferences, history, tone, and active projects live, under your lock and key.

- When I tell Claude I'm a vegetarian, Gemini shouldn't suggest a steakhouse.

- When Cursor learns I prefer functional React components, ChatGPT should stop generating class-based boilerplate.

- When I specify "never summarize, always quote," every tool hears it - once.

The architecture is radically simple because it has to be. It's a centralized memory controlled by a decentralized user. Tools can read and write context, but only with explicit, granular permissions. No carte blanche. No "trust us" hand-waving. If you want to build an assistant for me, you integrate with my memory vault or you're just another amnesia machine.
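To make "explicit, granular permissions" concrete, here is a minimal sketch of how a vault might gate reads and writes per tool and per namespace. Everything in it (the `MemoryVault` class, the namespace names, the `read`/`write` actions) is a made-up illustration, not an existing library, and it leaves out encryption and persistence entirely.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryVault:
    """Toy in-memory vault: namespaced facts plus per-tool grants (hypothetical design)."""
    facts: dict = field(default_factory=dict)   # namespace -> {key: value}
    grants: dict = field(default_factory=dict)  # tool -> {(namespace, action), ...}

    def grant(self, tool: str, namespace: str, action: str) -> None:
        self.grants.setdefault(tool, set()).add((namespace, action))

    def revoke(self, tool: str, namespace: str, action: str) -> None:
        self.grants.get(tool, set()).discard((namespace, action))

    def _check(self, tool: str, namespace: str, action: str) -> None:
        # Default deny: a tool with no matching grant gets nothing.
        if (namespace, action) not in self.grants.get(tool, set()):
            raise PermissionError(f"{tool} may not {action} '{namespace}'")

    def write(self, tool: str, namespace: str, key: str, value: str) -> None:
        self._check(tool, namespace, "write")
        self.facts.setdefault(namespace, {})[key] = value

    def read(self, tool: str, namespace: str) -> dict:
        self._check(tool, namespace, "read")
        return dict(self.facts.get(namespace, {}))


vault = MemoryVault()
vault.grant("claude", "diet", "write")
vault.grant("gemini", "diet", "read")

vault.write("claude", "diet", "restriction", "vegetarian")
print(vault.read("gemini", "diet"))  # {'restriction': 'vegetarian'}

try:
    vault.read("gemini", "projects")  # never granted
except PermissionError as err:
    print(err)  # gemini may not read 'projects'
```

The point of the sketch is the default-deny check: Gemini can see my diet because I said so, and nothing else.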

If this sounds familiar to folks following the Fediverse scene - it should. Interoperability is the fight. The same way Bridgy Fed lets you follow someone from Mastodon to Bluesky and back, we need AI tools that don’t trap your context in silos. Your memory should move with you. We already know how to build bridges - ActivityPub, AT Protocol and Bridgy Fed itself show us the way. We just need to apply that thinking to your cognitive stack.

We're Already Wearing the Cognitive Leash

As I wrote in "A User's Guide to Loving Your Cognitive Leash," we're already tethered to these systems. The question is: who holds the handle? Right now, I have five different leashes, all yanking in different directions. My digital identity is a Frankenstein's monster of assumptions stitched together in proprietary data silos - a form of digital feudalism where we work the land but the platform lords own the harvest.

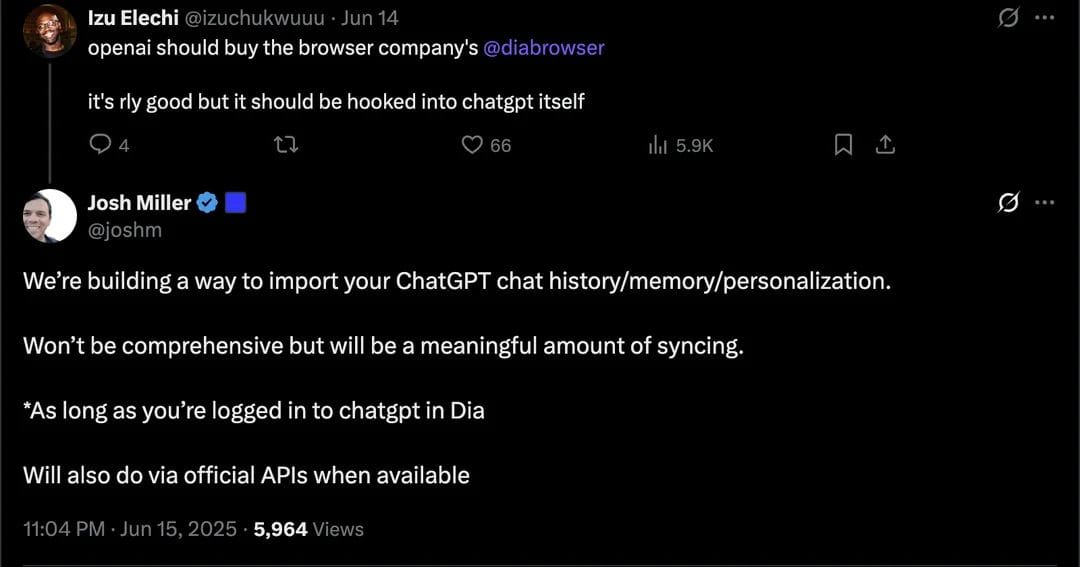

The Browser Company gets a piece of this. Josh Miller tweeted they're building ChatGPT memory import for Dia. But that's a one-way street, a bandage on a severed limb. We don't need data import; we need bidirectional, universal sync. A protocol, not a feature.

The Technical Reality (The Building Blocks Are Already Here)

This isn't science fiction. We have everything we need:

Model Context Protocol (MCP) - The reason everyone's talking about MCP right now is that it's the first real attempt to solve the fundamental problem of AI isolation. It's literally designed for AI-to-external-system communication. It has dynamic discovery - AI models can discover and use MCP-defined tools at runtime. Your AI doesn't need to be pre-programmed for every integration; it can find and use new capabilities on the fly. The killer feature? MCP servers can BE memory servers. Instead of each AI tool building its own memory system, they can all connect to YOUR memory server via MCP. One protocol, infinite memory clients. Any agent can read and write to this store over MCP, within guardrails you define and refine.
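To give a feel for "MCP servers can BE memory servers," here is a toy, standard-library-only sketch of a memory server answering JSON-RPC 2.0 requests. The message shapes are modelled on MCP's `tools/list` and `tools/call` methods as I understand them. The `remember` and `recall` tool names are my own invention, and this is not the official SDK. A real server would use an actual MCP implementation and a real transport.

```python
import json

MEMORY = {}  # key -> value; stands in for the user's vault

# Tool descriptors, shaped like entries in a tools/list result.
TOOLS = [
    {
        "name": "remember",  # hypothetical tool name
        "description": "Store a fact about the user.",
        "inputSchema": {
            "type": "object",
            "properties": {"key": {"type": "string"}, "value": {"type": "string"}},
            "required": ["key", "value"],
        },
    },
    {
        "name": "recall",  # hypothetical tool name
        "description": "Fetch a stored fact by key.",
        "inputSchema": {
            "type": "object",
            "properties": {"key": {"type": "string"}},
            "required": ["key"],
        },
    },
]


def text_result(text, is_error=False):
    return {"content": [{"type": "text", "text": text}], "isError": is_error}


def handle(raw: str) -> str:
    """Dispatch one JSON-RPC request string and return the response string."""
    req = json.loads(raw)
    method, params = req["method"], req.get("params", {})
    if method == "tools/list":
        result = {"tools": TOOLS}  # this is what lets a client discover us at runtime
    elif method == "tools/call":
        name, args = params.get("name"), params.get("arguments", {})
        if name == "remember":
            MEMORY[args["key"]] = args["value"]
            result = text_result("stored")
        elif name == "recall":
            found = args["key"] in MEMORY
            result = text_result(MEMORY.get(args["key"], "unknown key"), not found)
        else:
            result = text_result(f"unknown tool: {name}", True)
    else:
        error = {"code": -32601, "message": "Method not found"}
        return json.dumps({"jsonrpc": "2.0", "id": req.get("id"), "error": error})
    return json.dumps({"jsonrpc": "2.0", "id": req.get("id"), "result": result})


def call(method, params=None, id_=1):
    """Client-side helper: round-trip a request through the handler."""
    request = {"jsonrpc": "2.0", "id": id_, "method": method, "params": params or {}}
    return json.loads(handle(json.dumps(request)))


# One tool writes a preference...
call("tools/call", {"name": "remember",
                    "arguments": {"key": "react_style", "value": "functional components"}})
# ...and any other tool can read it back from the same server.
reply = call("tools/call", {"name": "recall", "arguments": {"key": "react_style"}})
print(reply["result"]["content"][0]["text"])  # functional components
```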

Vector Databases - For turning three months of scattered conversations into a searchable semantic history. Your "I hate cheese" from a ChatGPT conversation should inform Claude's recipe suggestions today.
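Here is roughly what that retrieval step looks like, as a toy. To keep it runnable with only the standard library, the "embedding" is plain word counts, which matches on shared words rather than meaning. A real setup would swap `embed` for an actual embedding model and `MemoryIndex` for a vector database. Both names are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Stand-in embedding: bag-of-words counts (a real store would call an embedding model)."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class MemoryIndex:
    """Toy vector index: linear scan over (source, text, vector) rows."""

    def __init__(self):
        self.items = []

    def add(self, source: str, text: str) -> None:
        self.items.append((source, text, embed(text)))

    def search(self, query: str, k: int = 1):
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[2]), reverse=True)
        return [(source, text) for source, text, _ in ranked[:k]]


index = MemoryIndex()
index.add("chatgpt", "I hate cheese")
index.add("chatgpt", "The target audience is serious athletes")
index.add("cursor", "I prefer functional components and Tailwind CSS")

# Months later, a different tool asks before suggesting dinner.
print(index.search("suggest a recipe with cheese"))  # [('chatgpt', 'I hate cheese')]
```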

Versioning (Git for the Self) - Track, audit, and roll back changes to your digital persona if an AI misunderstands and pollutes your profile.
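A minimal sketch of the "Git for the Self" idea: an append-only history of profile snapshots, where a rollback is itself a new commit so the audit trail never loses anything. `VersionedProfile` and `Commit` are illustrative names, and storing full snapshots is a simplification. A real system would likely store diffs and sign each entry.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Commit:
    author: str     # which tool (or the user) made the change
    message: str
    snapshot: dict  # full copy of the profile after the change


@dataclass
class VersionedProfile:
    history: list = field(default_factory=list)

    @property
    def current(self) -> dict:
        return dict(self.history[-1].snapshot) if self.history else {}

    def commit(self, author: str, message: str, **changes) -> int:
        """Apply changes on top of the current profile; return the new version number."""
        self.history.append(Commit(author, message, {**self.current, **changes}))
        return len(self.history) - 1

    def log(self) -> list:
        return [f"{i} {c.author}: {c.message}" for i, c in enumerate(self.history)]

    def rollback(self, version: int) -> None:
        """Restore an earlier snapshot as a NEW commit, keeping the bad one on record."""
        old = self.history[version]
        self.history.append(Commit("user", f"rollback to {version}", dict(old.snapshot)))


profile = VersionedProfile()
good = profile.commit("claude", "learned diet", diet="vegetarian")
profile.commit("gemini", "misread a joke", diet="carnivore")

profile.rollback(good)
print(profile.current)  # {'diet': 'vegetarian'}
print(profile.log())    # ['0 claude: learned diet', '1 gemini: misread a joke', '2 user: rollback to 0']
```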

Here's what it looks like in practice:

# Morning Brainstorm (ChatGPT)

"I'm building a React app for tracking workout routines"

"I prefer functional components and Tailwind CSS"

"The target audience is serious athletes"

# Afternoon Coding (Cursor) - Automatically knows:

- Suggests athletic-focused variable names

- Defaults to functional React patterns

- Applies Tailwind classes without asking

# Evening Documentation (Claude) - Already understands:

- Technical level (React proficient)

- Project context (fitness app)

- Writing style (direct, no fluff)

The Inevitable Hard Parts (Let's Not Pretend)

Privacy & Sovereignty: A unified memory is a single, massive target. The only solution is radical user control: end-to-end encryption with keys that only the user holds. If I can't self-host it, I can't trust it. Anything less is security theater designed for a surveillance honeypot.

Standardization: Herding the AI giants into an open protocol will be a blood sport. Their moats are built from the walls of your fragmented context. OpenAI wants their walled garden. Google wants theirs. Forcing their hand will require a killer app or a user rebellion.

Conflict Resolution: What happens when ChatGPT says I love Python and Claude says I love JavaScript? We need intelligent conflict resolution - a system of lenses, profiles, or weighted context, with you as the final arbiter.
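One way this could work, sketched under heavy assumptions: each tool submits weighted claims, a "lens" restricts which sources count in a given context, and an explicit user override always wins. The `Claim` type, the weights, and the summing rule are all invented for illustration. A real resolver would need to handle recency, scope, and a lot more nuance.

```python
from dataclasses import dataclass


@dataclass
class Claim:
    source: str    # which tool asserted this
    key: str
    value: str
    weight: float  # hypothetical confidence score


def resolve(claims, lens=None, overrides=None):
    """Pick one value per key: sum weights per value within the lens, then apply user overrides."""
    totals = {}
    for claim in claims:
        if lens is not None and claim.source not in lens:
            continue  # this source is outside the active lens
        bucket = totals.setdefault(claim.key, {})
        bucket[claim.value] = bucket.get(claim.value, 0.0) + claim.weight
    resolved = {key: max(values, key=values.get) for key, values in totals.items()}
    resolved.update(overrides or {})  # the user is the final arbiter
    return resolved


claims = [
    Claim("chatgpt", "favorite_language", "Python", 0.6),
    Claim("claude", "favorite_language", "JavaScript", 0.8),
    Claim("cursor", "favorite_language", "Python", 0.5),
]

print(resolve(claims))                   # {'favorite_language': 'Python'}
print(resolve(claims, lens={"claude"}))  # {'favorite_language': 'JavaScript'}
print(resolve(claims, overrides={"favorite_language": "TypeScript"}))
# {'favorite_language': 'TypeScript'}
```

In the first call, Python wins because two weaker claims (0.6 + 0.5) outweigh one stronger one (0.8). Whether that is the right rule is exactly the kind of thing the user should get to decide.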

Abuse Potential: If done wrong, this is every authoritarian's dream. The architecture must be hostile to centralized control by design.

The Call to Arms

Right now, the user is the API. The user is the integration layer. The user is the glue, doing the manual, repetitive, cognitive labor that the "intelligence augmentation" tools were supposed to eliminate.

We solved this for passwords with password managers. We can solve it for AI. But only if we put the user, NOT the vendor, at the center.

We need to start building the ecosystem that gives users a memory. We need:

- An open protocol for memory interchange (MCP is a start)

- A self-hostable reference implementation that's genuinely user-controlled

- Encryption by default with user-owned keys

- Granular permissions as a non-negotiable core feature

- The absolute right to export everything (my data, my rules)

The first company to nail this won't just own the next interaction layer of AI. They will be the first to treat users not as the product to be mined, but as the agents to be empowered.

I don't want a hundred AI relationships. I want one memory and infinite tools that respect me and my privacy and my autonomy.

I'm tired of being the glue. I want my tools to work for me, not the other way around.

In the end - interoperability isn’t just a technical preference. It’s a political stance. It’s a commitment to a world where users are not captive to platforms, where your data, identity, and intelligence aren’t locked behind APIs you don’t control. Standards are power. Protocols are freedom. Whether it’s your social graph or your AI memory, the choice is the same: walled gardens that don't truly empower you, or an open ecosystem that remembers who you are on your terms. If we want tools that truly serve us, they have to speak a common language - and that language has to be ours. So which of you is going to build it?